Welcome back to Currents, a monthly column from Reimagine Energy dedicated to the latest news at the intersection of AI & Energy. At the end of each month, I send out an expert-curated summary of the most relevant updates from the sector. The focus is on major industry news, published scientific articles, a recap of the month’s posts from Reimagine Energy, and a dedicated job board.

1. Industry news

Is infinite energy all we need to reach AGI?

The last weeks of 2024 have been hectic. Let’s look at the major announcements in the space over the past month:

OpenAI announced a $200-per-month ChatGPT Pro subscription which gives access to o1 pro mode, a version of o1 that uses more compute to think harder and provide even better answers to the hardest problems.

Text-to-video generators: OpenAI’s Sora was released for ChatGPT Plus subscribers in some regions. Google announced the release of its rival product, Veo 2.

Google also released Gemini 2.0 Flash Experimental. I’ve been playing with this latest version, and the outputs were, most of the time, on par with o1. Since Gemini is free, it might be the best option if you don’t want to pay for a subscription right now.

Gemini 2.0 will also power Project Mariner, an agent that can take control of your browser to search for information, fill out forms, and even shop.

Microsoft released Phi-4, a 14-billion-parameter small language model (SLM) that specializes in complex reasoning and math.

Amazon announced Amazon Nova, a new family of foundation models that can handle text, images, and videos. They claim that the Nova family will be about 75% cheaper than competing models.

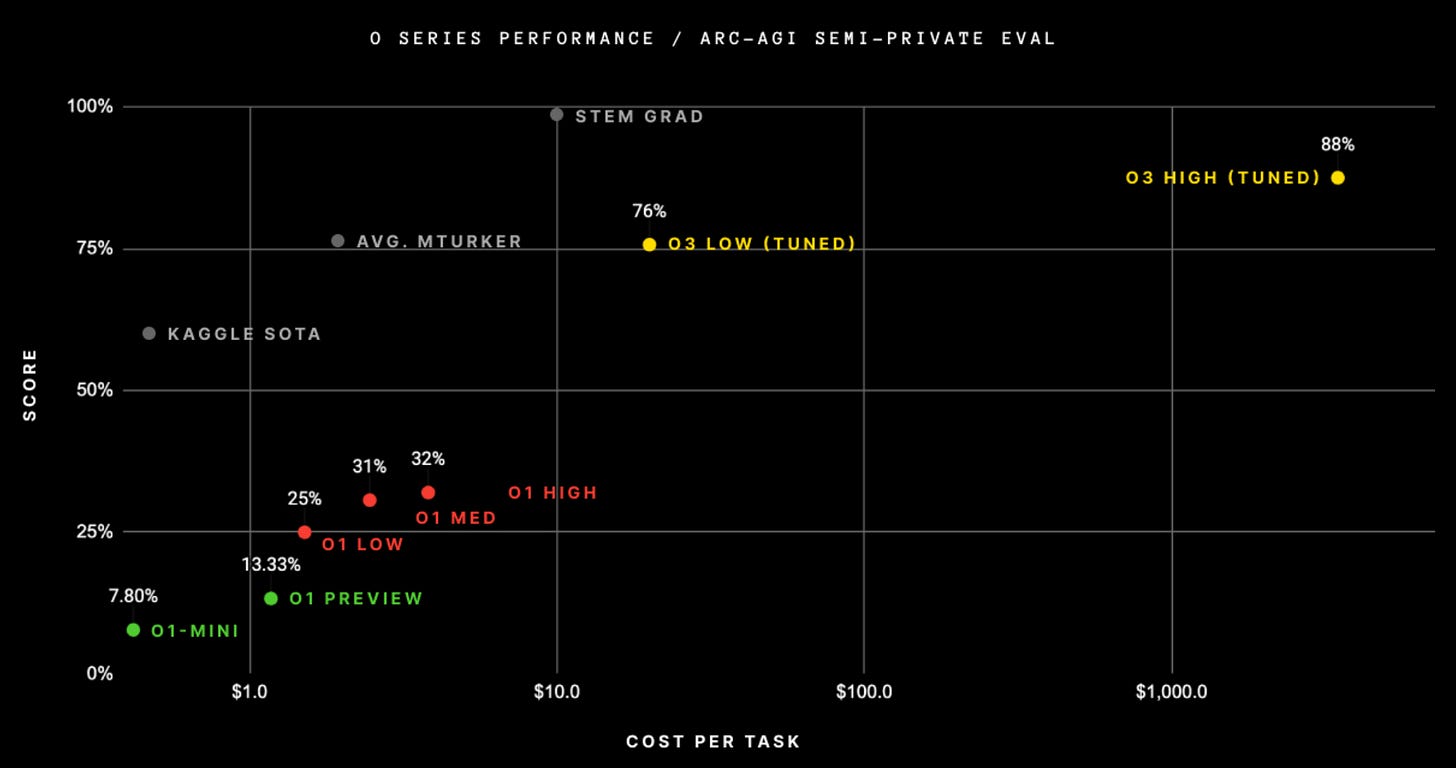

But what caught everyone’s attention was the announcement of OpenAI o3 and the impressive results the model achieved on the ARC-AGI benchmark.

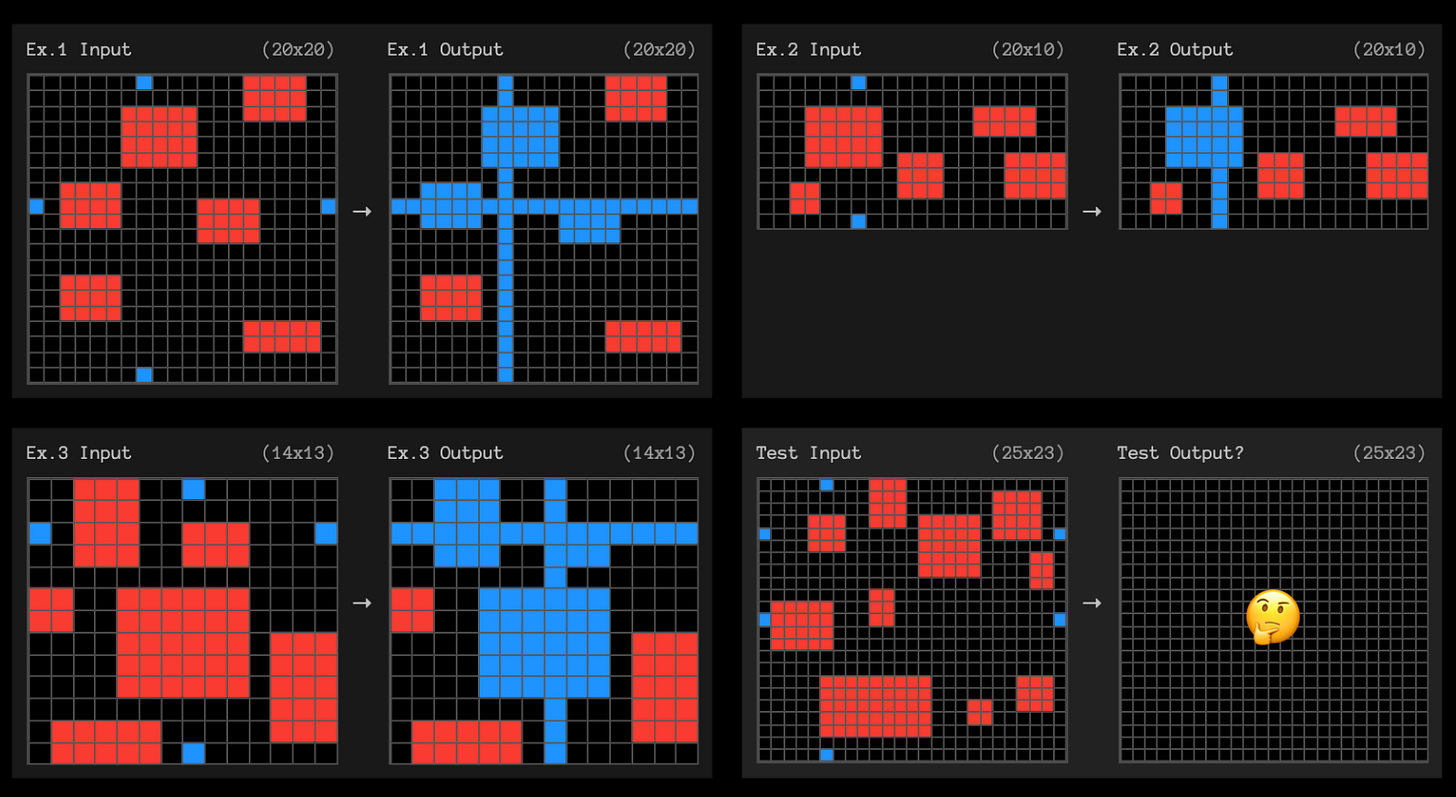

ARC-AGI is a benchmark designed to assess an AI’s ability to show adaptive general intelligence by solving tasks that are easy for humans but tough for computers. It consists of visual puzzles that require understanding basic concepts like objects, boundaries, and spatial relationships.

OpenAI’s o3 model scored 87.5% on this benchmark, surpassing previous records and reaching performance comparable to the average human score (85%). For reference, GPT-4o achieved only 5% on this benchmark earlier in 2024. This has been celebrated as a fundamental leap toward Artificial General Intelligence (AGI).

How did o3 achieve such a major improvement? o3 leverages Chain of Thought (CoT) prompting, which encourages a model to articulate its thinking by solving problems step by step. To solve a task, o3 generates thousands of CoTs at test time and searches across them to find the steps needed for the final answer.
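A toy sketch can make the "generate many, then search" idea more concrete. This is not OpenAI's implementation, whose details are not public. It swaps in simple majority voting over sampled answers (often called self-consistency) as a stand-in for the search step, and `noisy_solver` is a hypothetical placeholder for a model sampling one chain of thought. The 30% per-sample success rate is an assumption chosen for illustration.

```python
import random
from collections import Counter


def noisy_solver(true_answer: int, p_correct: float, rng: random.Random) -> int:
    """Hypothetical stand-in for one sampled chain of thought.

    Returns the right answer with probability p_correct; otherwise one of
    nine different wrong answers, chosen uniformly.
    """
    if rng.random() < p_correct:
        return true_answer
    return true_answer + rng.randint(1, 9)


def majority_vote(n_samples: int, true_answer: int, p_correct: float,
                  rng: random.Random) -> int:
    """Sample n_samples candidate answers and keep the most frequent one."""
    answers = [noisy_solver(true_answer, p_correct, rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, p_correct: float = 0.3,
             n_tasks: int = 500, seed: int = 0) -> float:
    """Share of simulated tasks where the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(n_samples, 42, p_correct, rng) == 42
               for _ in range(n_tasks))
    return hits / n_tasks


# Compute grows linearly with the number of samples per task.
for n in (1, 10, 100):
    print(f"{n:>3} samples per task -> accuracy {accuracy(n):.2f}")
```

In this simulation, a solver that is right less than a third of the time on a single attempt becomes much more reliable once many attempts are pooled, but every extra attempt is paid for in inference compute.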

This also results in very long compute times and high costs. According to OpenAI, solving the ARC-AGI tasks with o3 cost around $3,400 per task, which covers computing, searching, and evaluating possible answers at inference time. This led to total spending of about $1.6 million for the entire benchmark.
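As a quick sanity check on those two figures, the snippet below derives how many task runs they imply. The task count is my own back-of-the-envelope result, not a number reported in the source.

```python
def implied_task_count(total_spend_usd: float, cost_per_task_usd: float) -> float:
    """Number of task runs implied by a total budget and a per-task cost."""
    return total_spend_usd / cost_per_task_usd


# Figures quoted in the text above.
tasks = implied_task_count(1_600_000, 3_400)
print(round(tasks))  # prints 471
```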

What I’m thinking

Humans can solve these puzzles in minutes (how long did it take you to solve the one above?). There is a considerable difference between actual intelligence and a system that’s essentially trying every possible reasoning path until it stumbles on the right one.

What we really want is a system that naturally adapts, reasons abstractly, and does so as effortlessly as humans do. Current large language models (LLMs) show some remarkable capabilities: they can appear to reason, solve specialized tasks, and significantly boost productivity in coding and content creation. But they’re still missing crucial human-like qualities such as genuine understanding of logic, or the ability to create knowledge and ideas that were not already embedded in their training set.

Is, then, infinite energy all we need to achieve AGI? LLMs keep improving, and their parent companies are pushing them to their limit to remain relevant and justify their astronomical valuation and cloud costs. Still, my opinion right now is that it’s very unlikely that we’ll reach general intelligence by employing the same architectures and just throwing in more compute time.

What does o3 mean for our energy system?

Even though AGI likely won’t happen this way, companies like OpenAI will keep scaling compute at the inference level. With o3, aside from pre-training compute costs, the cost for each task completion will rise. This will push data center energy demand even higher.

How will we power all of this? We’ll likely need a mix of renewables, batteries, natural gas, and nuclear. Above all, we’ll need to invest heavily in expanding and modernizing our electricity grids.

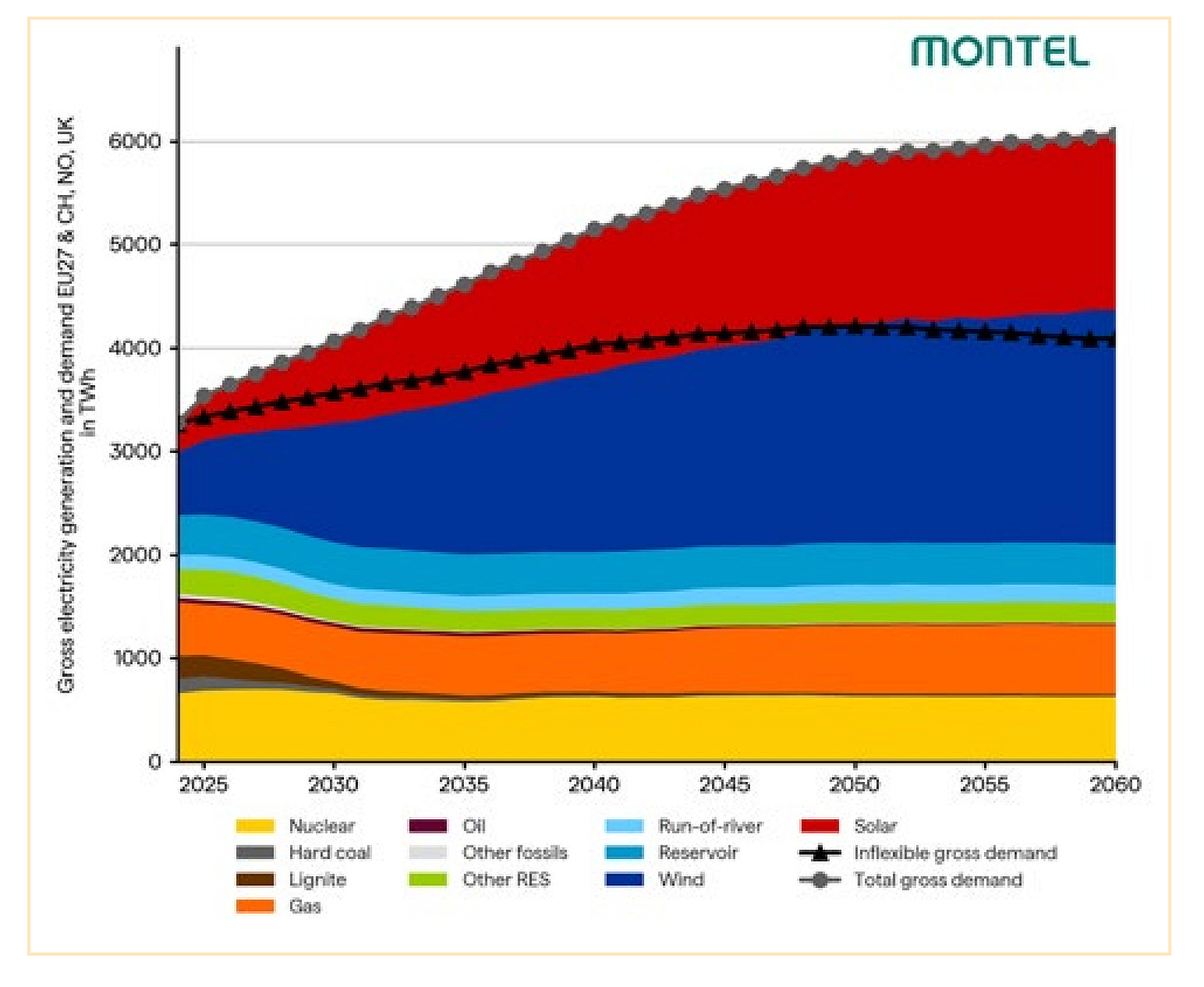

The image above shows one of the generation capacity forecasts for Europe from the Montel EU Energy Outlook 2024, including total gross demand and inflexible demand.[1]

Let’s look more closely at the different elements:

Electricity Grid expansion

In the UK, £77 billion was recently committed to expanding the electricity grid. This will gradually happen everywhere, as most existing grids are obsolete and undersized for the growing electricity demand.[2] Analysts at Rystad Energy say we’ll need to invest about $3.1 trillion in grid expansion and modernization.[3]

Renewables

After years of surprising (and largely unpredictable) growth in solar installations, the EU Market Outlook for Solar Power[4] showed a considerable slowdown in the EU in 2024. This might be the new normal for a while, unless we see major breakthroughs in storage solutions that raise the capture rate.

Germany experienced another Dunkelflaute in December, with power prices shooting up to over 800 €/MWh. Because of the interconnection with the Nordics, Denmark, Sweden and Norway also experienced these extremely high prices. Germany’s Energiewende is being blamed for the recent spikes, due to its nuclear phase-out in favor of high renewable capacity.[5]

Batteries

Energy storage prices have dropped significantly over the past few years, causing a boom in battery deployments. In the U.S., the grid added battery capacity equivalent to 20 nuclear reactors in just the past four years.[6] Meanwhile, in the EU, installed energy storage capacity tripled in 2024 compared to 2023.[7]

Nuclear

Big tech companies ramped up nuclear investments through the end of 2024.[8] Signing deals for power from refurbished plants can make economic sense, but building new ones from scratch takes a long time, and the economics are still unclear. Small modular reactors (SMRs) have been the cool new kid on the block for a while, but they haven’t yet proved they can operate at a competitive price, so they’re unlikely to be a short-term solution.

What I’m thinking

Planning the energy system of the future is a complex task that involves making decisions under many uncertainties. Energy policy planning for governments and utilities is even more complicated because these issues are getting very political: right-wing parties often lean toward keeping fossil fuels to avoid harming the economy, and left-wing parties push for more renewables and clean tech.

My current view of the energy supply mix for the coming years is this: renewables will keep getting cheaper, so we’ll install more wind and solar because it makes the most economic sense. Their intermittency can be problematic, but batteries and equipment that responds to price signals should fix most of these problems. In the long term, baseload will mainly come from nuclear. In the short term, we’ll likely keep using natural gas.

This is a complex and fascinating topic. I’m working on a comparative analysis of different energy sources, so stay tuned for that article coming in early 2025.

More AI-driven deals in the energy sector

Trane Technologies will acquire Brainbox AI in a first-of-its-kind deal of this scale in the sector. We already covered Brainbox AI in previous issues, when we discussed the release of its autonomous assistant, ARIA. Brainbox will join Trane with its team intact, while leveraging Trane’s partnerships and existing digital building management technology to grow adoption.

In Europe, more funding rounds for companies working on demand-side flexibility were announced:

Belgian Powernaut raised a €2.4 million pre-seed round to accelerate Virtual Power Plant adoption.

Swiss Hive Power raised a €3.5 million seed round to scale its AI-based software for optimising electric vehicle charging.

Belgo-American NOX Energy raised $1 million to integrate smart devices into the energy flexibility market.

What I’m thinking

Brainbox AI’s acquisition is a new milestone in the sector. Although incumbents like Schneider Electric have been acquiring smaller climate-tech startups for years, Brainbox stands out with over 190 employees and a Series A and B that totaled $60 million. On one hand, this deal confirms a strong market demand for AI-powered HVAC control; on the other, it illustrates that even the strongest players in the market might still rely on larger companies for long-term survival.

Meanwhile, recent EU funding rounds reveal that with Europe’s volatile electricity prices, flexibility is the new hot topic for VCs. It’ll be interesting to watch how these companies develop their products. The market exists, and regulation is slowly getting there. Ultimately, success will depend on effective product design, which is the hardest and most fascinating aspect!

2. Scientific publications

An interpretable data analytics-based energy benchmarking process for supporting retrofit decisions in large residential building stocks. The authors analysed a dataset of 49,000 Energy Performance Certificates (EPCs) from the Piedmont region in Italy. They used it to estimate and benchmark primary energy demand for space heating and domestic hot water. Different machine learning techniques were used to extract insights from the data. An additional Explainable AI (XAI) layer explained the results to non-experts.

What I’m thinking

EPCs are publicly available datasets that haven’t been widely analysed with machine learning. At Ento, we’re also working with thousands of these EPCs for our Danish clients. Because we have access to both the EPCs and the real consumption data from the buildings, we can identify and try to explain the differences between theoretical and actual energy performance. The approach from the paper is thorough and worth investigating further. Two issues we’ve found when working with EPCs are the inconsistent data structure across regions and countries and the lack of easy access through an API.

How long is long enough? Finite-horizon approximation of energy storage scheduling problems. In this math-heavy paper, the authors examine how most real-world energy storage system schedulers use a finite rolling horizon to manage what is fundamentally an infinite-horizon problem. They derive a lower bound on how long the horizon must be, based on the technical characteristics of the storage system. They also give an upper bound on how “bad” the solution can get if the horizon is too short.
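To illustrate why the horizon length matters, here is a minimal rolling-horizon sketch. It is not the paper's method or its bounds. It assumes a lossless battery that moves one energy unit per step, uses made-up prices, and values any energy left at the end of the planning window at zero. All names (`first_action`, `rolling_profit`, `PRICES`) are hypothetical.

```python
def first_action(window, soc, capacity):
    """Best first move over a finite price window, via backward induction.

    Moves: +1 charges one unit (pays the price), -1 discharges one unit
    (earns the price), 0 idles. Energy left at the end of the window is
    valued at zero, which is what makes short windows short-sighted.
    """
    value = [0.0] * (capacity + 1)  # value-to-go for each state of charge
    for k in range(len(window) - 1, -1, -1):
        step = []
        for s in range(capacity + 1):
            options = [(-a * window[k] + value[s + a], a)
                       for a in (-1, 0, 1) if 0 <= s + a <= capacity]
            step.append(max(options))  # (best value, best move) for state s
        value = [v for v, _ in step]
    return step[soc][1]  # after the loop, step holds the decisions for k = 0


def rolling_profit(prices, horizon, capacity=2):
    """Re-plan at every step over the next `horizon` prices; apply one move."""
    soc, profit = 0, 0.0
    for t in range(len(prices)):
        move = first_action(prices[t:t + horizon], soc, capacity)
        soc += move
        profit -= move * prices[t]
    return profit


# Illustrative prices (made up), repeated over three "days".
PRICES = [30, 10, 50, 20, 60, 15, 40, 25] * 3

for h in (1, 2, 4, len(PRICES)):
    print(f"horizon {h:>2}: profit {rolling_profit(PRICES, h):.0f}")
```

With a one-step horizon the scheduler never charges, because stored energy has no value inside its window, so it earns nothing. A window covering the whole price series recovers the best schedule for this toy problem. The paper asks how long the window needs to be for a real storage system to get close to that.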

What I’m thinking

As mentioned earlier, storage systems are becoming vital pieces of our energy infrastructure, and optimally scheduling them is a complex challenge. These highly mathematical problems are perfect candidates for tools like o1 pro mode. In 2025, we’ll likely see more of these tools used in deep scientific research. To be clear, this doesn’t mean we won’t need researchers and domain experts anymore. They’re the only ones who can understand an LLM’s output and verify whether it’s valid. As LLMs reach PhD-level capabilities, research will accelerate, and the level required to do meaningful work will likely increase.

3. Reimagine Energy publications

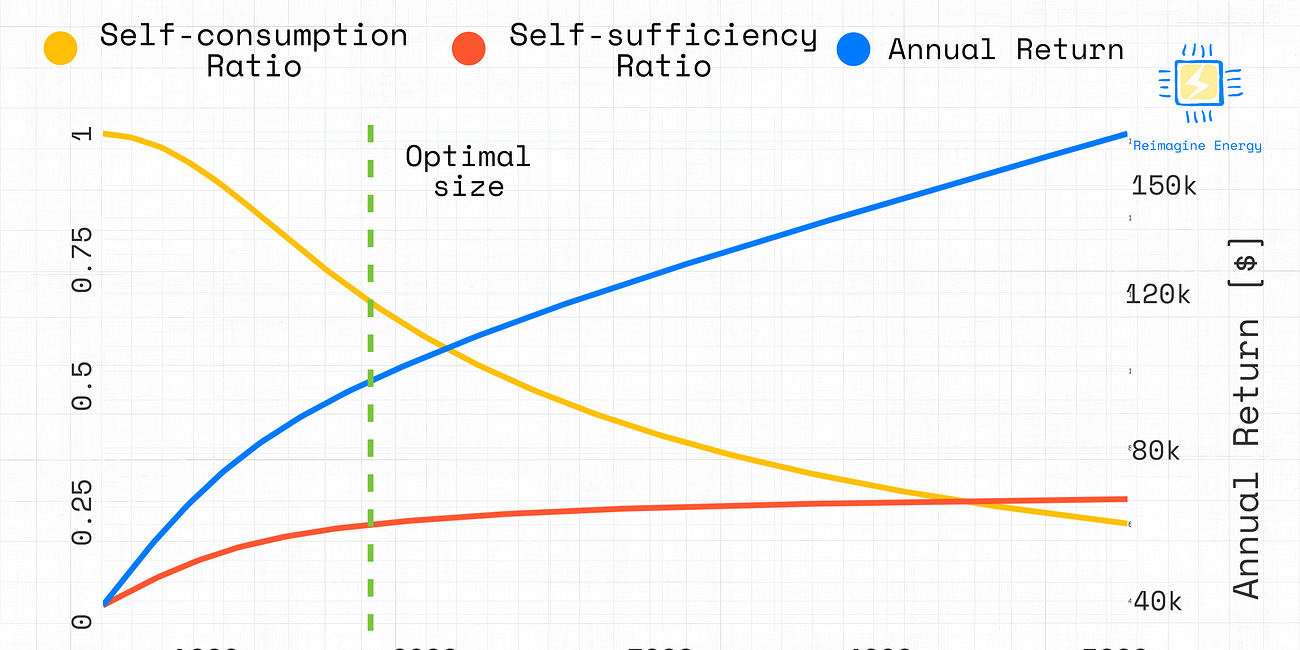

Check out my latest tutorial on finding the optimal size of a rooftop solar PV system using Python.

4. AI in Energy job board

This space is dedicated to job posts in the sector that caught my attention during the last month. I have no affiliation with any of them; I’m just looking to help readers connect with relevant jobs in the market.

Data Scientist at Aurora Energy Research

Chief Technology Officer at myenergi

Data Scientist at NOX Energy

Marie Curie Industrial PhDs at UPC

Conclusion

With so much going on in the sector, it’s not easy to follow everything. If you’re aware of anything that seems relevant and should be included in Currents (job posts, scientific articles, relevant industry events, etc.), please reply to this email or reach out on LinkedIn and I’ll be happy to consider it for inclusion!

[1] https://info.montelgroup.com/hubfs/Montel-Energy%20Outlook%202060%20Q4.pdf

[2] https://www.theguardian.com/business/2024/dec/18/energy-firms-rewire-great-britain-electricity-grid

[3] https://www.rystadenergy.com/news/power-grids-investments-energy-transition-permitting-policies

[4] https://www.solarpowereurope.org/insights/outlooks/eu-market-outlook-for-solar-power-2024-2028

[5] https://www.yahoo.com/news/sweden-sees-red-over-germanys-053017355.html

[6] https://www.theguardian.com/environment/2024/oct/24/power-grid-battery-capacity-growth

Regarding the scheduling problem horizon publication, these are precisely the types of problems that LLMs can't work on. They can't generalize, understand logic, derive solutions, or propose theorems (most PhD candidates can't either).

Wolfram Alpha is closer to that than any LLM running around on interpolated data. Optimization solvers are better at making decisions and could be interpreted as a higher level of artificial intelligence. Of course, it is not my idea, but Prof. Powell's (https://castle.princeton.edu/the-7-levels-of-ai/)

Anyway, my two cents.

As always a great read.

Bests,

Dario, PhD candidate