DeepSeek, Jevons, Stargate, and cognitive offloading

Currents: AI & Energy Insights - January 2025

Welcome back to Currents, a monthly column from Reimagine Energy dedicated to the latest news at the intersection of AI & Energy. Every last week of the month, I send out an expert-curated summary of the most relevant updates from the sector. The focus is on major industry news, published scientific articles, a recap of the month’s posts from Reimagine Energy, and a dedicated job board.


1. Industry news

DeepSeek and LLM democratization

This month’s headlines have all been about DeepSeek, the latest LLM from China that caused panic on Wall Street and generated widespread discussion in the tech community. Here’s a brief summary of what DeepSeek is:

DeepSeek is an open-source large language model developed by a Chinese research lab that is backed by a major quant/hedge fund. It comes in two flavors:

V3: A pretrained model that nearly matches the performance of some U.S. frontier models at a fraction of the cost.

R1: A “reasoning” model that, via reinforcement learning and techniques like Group Relative Policy Optimization, shows chain-of-thought capabilities comparable to OpenAI’s first reasoning models (like o1).

The DeepSeek team employed advanced efficiency engineering (e.g., optimized key–value cache compression, mixture-of-experts architectures)1 to squeeze more performance out of less capable chips (e.g., Nvidia H800s, the export-compliant, reduced-bandwidth variant of the H100s employed by leading AI companies), demonstrating that high-quality LLMs can now be trained with dramatically lower compute and monetary costs.

So why is DeepSeek such a big deal?

Geopolitics: DeepSeek is the first non-U.S. model to come close to state-of-the-art U.S. systems on key benchmarks at a much lower training cost. This challenges the long-held assumption that massive (and expensive) infrastructure is needed to build competitive LLMs. It also raises concerns about the impact of U.S. export controls on advanced chips.

AI ecosystem impact: It comes with open weights, released under a permissive MIT license, which has already spurred a flood of derivatives on platforms like Hugging Face2 and may end up leveling the playing field.

Business implications: Nvidia stock fell 17% this week amid doubts about whether large-scale investments in compute are actually required. The competitive moat of companies like OpenAI and Anthropic was also questioned.3

Energy efficiency: DeepSeek’s training cost and energy use are lower, although widespread deployment and increased accessibility could trigger a rebound effect where greater efficiency drives a surge in overall energy consumption.

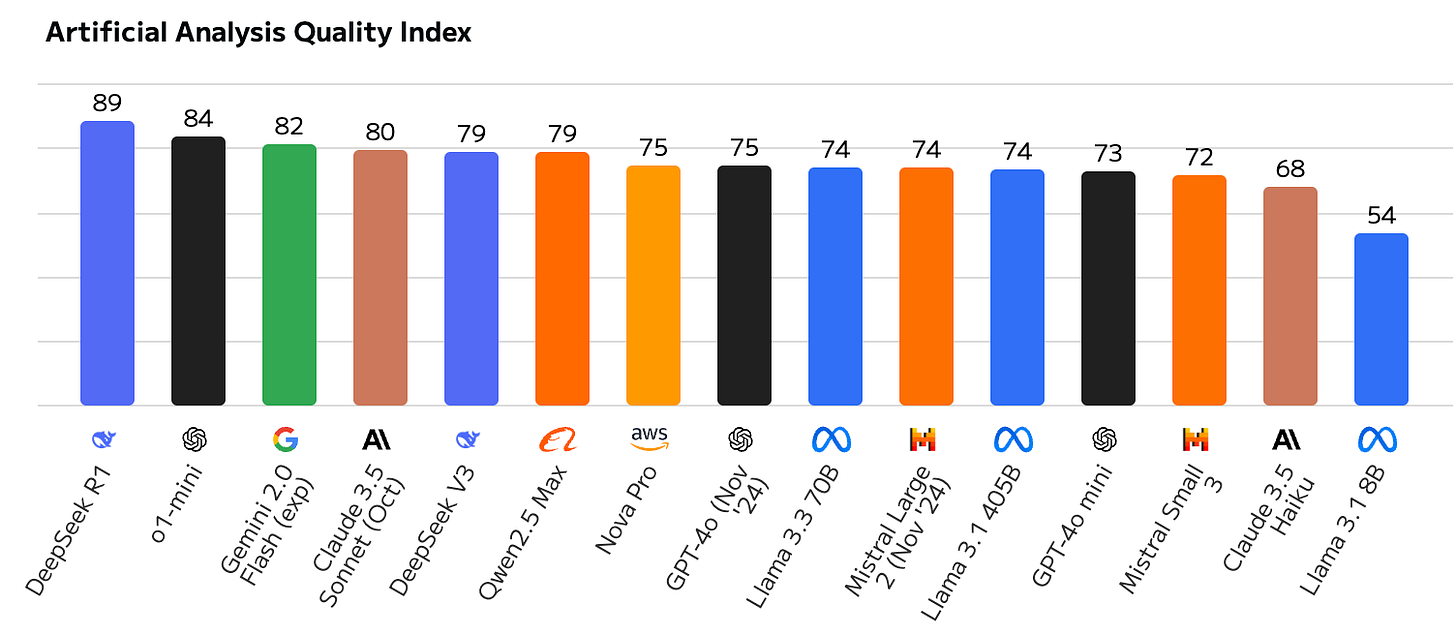

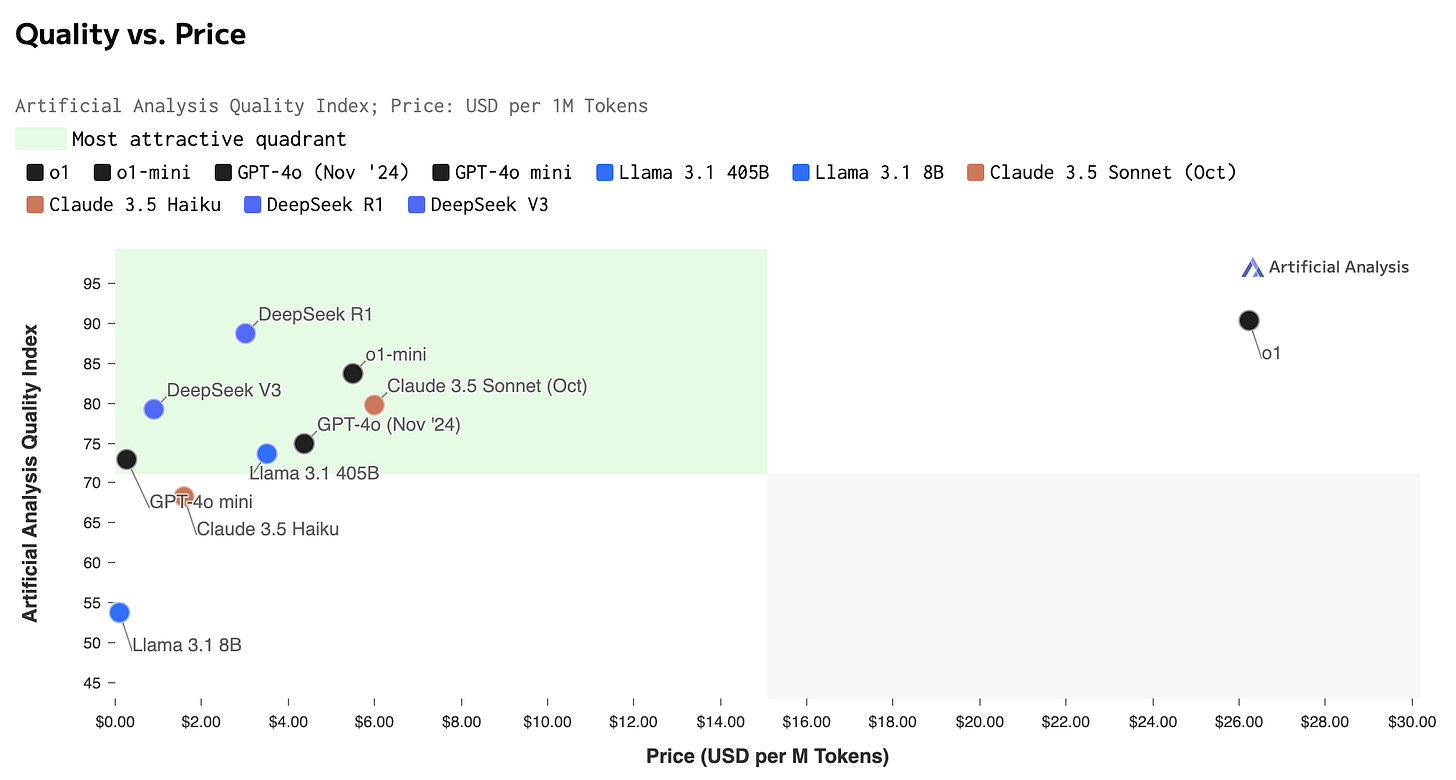

LLM quality vs. price comparison from Artificial Analysis. Find the methodology here.

What I’m thinking

These are the most interesting consequences from the DeepSeek release in my opinion:

More competition in the field means better and cheaper options for the end-user. A couple of weeks ago we had to pay a subscription to work with reasoning models. Today we get OpenAI o3-mini, DeepSeek, Microsoft Copilot, and the Google AI Studio Flash Thinking models, all for free.

Lower infrastructure and training costs push us in the direction of having commoditized models. The rest of us can then focus on building apps that provide value on top of such models (the so-called “wrappers”). When everyone has access to equally powerful models, the real competitive advantage lies in deeply understanding the unique problems that we’re trying to solve with AI.

If I had to pick one technical innovation from the DeepSeek paper, it’s that the model learned to reason on its own, using a variant of reinforcement learning called Group Relative Policy Optimization. Companies building chat interfaces for LLMs have widely applied Reinforcement Learning from Human Feedback to tune the model’s behavior by showing it good examples of user interaction. DeepSeek seems to have taken the humans out of the loop.

The obvious upside of a more efficient model is its reduced cost and energy use, but this deserves its own analysis. I’ll cover that in the next section.
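To make the Group Relative Policy Optimization idea mentioned above a bit more concrete, here is a minimal sketch of its core step only: scoring each sampled answer relative to the other answers generated for the same prompt, instead of relying on a separate critic model. The function name, the example rewards, and the use of the population standard deviation are my assumptions for illustration. The full method also includes a clipped policy update and a KL penalty, which are not shown here.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of answers to the same prompt.

    Answers that score above the group average get a positive advantage,
    answers below it get a negative one. `eps` avoids division by zero
    when every answer in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population standard deviation
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rule-based rewards for four sampled answers to one prompt
# (1.0 = correct final answer, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Because the baseline is just the group average, no human labels or learned value model are needed at this step, which is what makes the approach comparatively cheap.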

Nadella, Jevons, and more efficient AI

Last Sunday, probably in an attempt to shield Microsoft from the upcoming Wall Street bloodbath, Satya Nadella tweeted about how more efficient models will lead to increased AI adoption. To make his point, he cited the Jevons paradox.

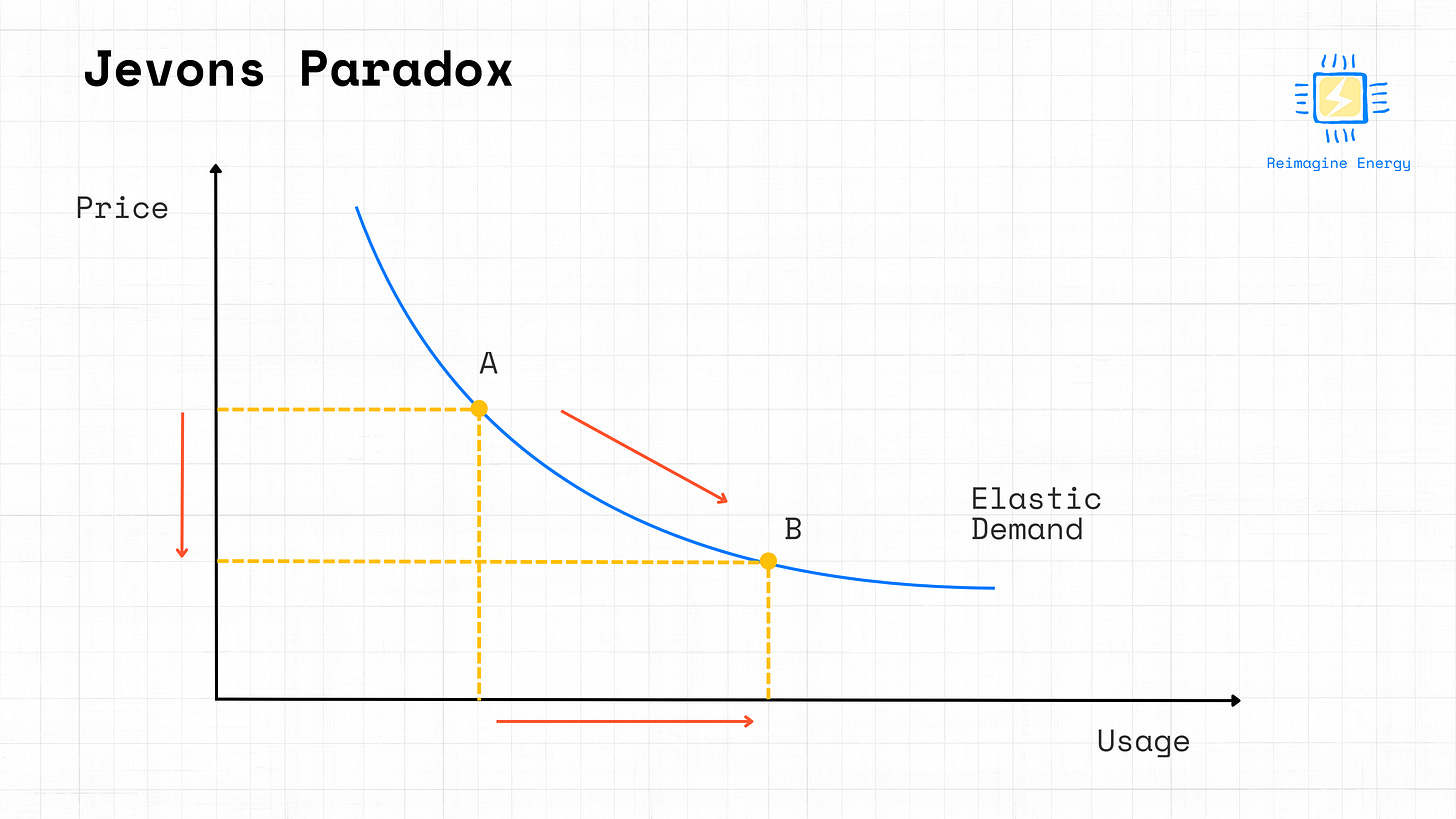

What is the Jevons paradox?

William Stanley Jevons was an English economist who, among other things, studied the impact of Britain’s coal supplies on the British economy. In his 1865 book “The Coal Question”,4 he writes:

It is a confusion of ideas to suppose that the economical use of fuel is equivalent to diminished consumption. The very contrary is the truth.

Jevons observed that fuel efficiency improvements in steam engines (and later, in other energy technologies) did not lead to lower coal consumption. Instead, as the fuel became cheaper per unit of work, its usage increased—sometimes even exceeding the original consumption levels.

The concept was later expanded to: “Increased efficiency lowers the cost per unit of energy service, which in turn can stimulate demand and ultimately lead to higher total energy use.”
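A back-of-the-envelope sketch of this mechanism, under two simplifying assumptions of mine: the cost of an energy service is proportional to the energy needed per unit of service, and demand for the service follows a constant price elasticity. The function name and the elasticity values are hypothetical and chosen only to show the three possible regimes.

```python
def energy_use_ratio(efficiency_gain: float, elasticity: float) -> float:
    """Total energy use after an efficiency gain, relative to before.

    efficiency_gain: factor by which energy per unit of service drops
                     (2.0 means half the energy per unit of service).
    elasticity:      magnitude of the price elasticity of demand
                     for the service (assumed constant).
    """
    # Cost per unit of service falls by `efficiency_gain`, so demand
    # for the service grows by efficiency_gain ** elasticity.
    service_ratio = efficiency_gain ** elasticity
    # Each unit of service now needs 1 / efficiency_gain as much energy.
    return service_ratio / efficiency_gain


for elasticity in (0.5, 1.0, 1.5):
    ratio = energy_use_ratio(efficiency_gain=2.0, elasticity=elasticity)
    print(f"elasticity={elasticity}: energy use x{ratio:.2f}")
```

With these assumptions, total energy use only rises when the elasticity is above 1. Below 1, some of the savings are eaten up by extra demand, but total use still falls.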

Does it make sense then to explain increased AI use with Jevons’ ideas?

More efficient AI training and inference leading to increased AI adoption doesn’t seem related to the Jevons paradox. It’s actually what you’d expect from a technology that gets cheaper and better—it will be used more.

Still, the Jevons paradox could be applied to the potential increased electricity use that would be generated from more efficient (hence cheaper and more accessible) AI use. This is what different scientists in the field have been discussing and trying to understand—this paper provides a good overview.

But does this imply negative consequences for humanity?

What I’m thinking

Let’s take a step back. Most of us want a world with lower emissions, but why is that? We observed that emitting uncontrolled amounts of greenhouse gases into the atmosphere could lead to catastrophic environmental consequences. This could potentially lead to an unrecoverable collapse of civilization or even the complete extinction of humanity.

Indeed, the extinction of humanity would be an easy way to reach “Net Zero”. But is that what we want? No. Arguably the final goal of those concerned with decarbonization is for human society to flourish in a sustainable and resilient way.

Electrification, mechanization of agriculture, rail transport, etc. all led to an increase in greenhouse gas emissions in the past. Would we go back and give up on these advancements? Probably not.

I believe we’re in a similar place now with AI.

Will smarter and more efficient AI models increase global electricity consumption? Possibly. Does this mean humanity is better off by not developing AI or not making it more efficient and accessible? I don’t think so. The challenge is to develop a prosperous energy-rich civilization while also avoiding climate catastrophe.

Trump’s first weeks in office

Donald Trump was inaugurated as the 47th U.S. president on January 20th, with a display of his big tech minions seated in the first row.5 Here’s an overview of Trump’s policy initiatives on Energy and AI during his first 15 days:

Declared a national energy emergency to ease environmental restrictions, enabling rapid expansion of fossil fuel projects.

Officially withdrew from the Paris Agreement, distancing the U.S. from global climate commitments.

Rolled back clean energy initiatives, including EV tax credits, offshore wind leasing, and clean hydrogen funding.

Issued orders to expand fossil fuel production by resuming offshore and Arctic drilling, fast-tracking LNG export permits, and replenishing oil stockpiles.

Announced major projects (notably the “Stargate Project”) with hundreds of billions of dollars allocated to build state-of-the-art AI data centers and infrastructure in the U.S.

Launched public-private partnerships with industry giants such as OpenAI, SoftBank, Oracle, and Microsoft to secure U.S. leadership in AI, aiming to create hundreds of thousands of jobs and enhance national security.

What I’m thinking

Data centers’ electricity demand is projected to double or triple by 2028, driven by the rapid growth of AI adoption.6 Under Trump’s energy policies, the most immediately scalable solution to increase electricity supply is natural gas. Gas power plants are quick to deploy and can provide the reliable baseload power required by data centers. While natural gas burns cleaner than coal, it still emits substantial amounts of carbon dioxide and faces other challenges like methane leakage.

Relying heavily on natural gas will drive up emissions and set back the U.S. in the global race for clean energy. A focus on fossil fuels might, in the short term, “Make America Great Again”. In the long term, this is likely to undermine competitiveness in the emerging green economy, erode U.S. influence in global climate negotiations, and delay the global transition to a sustainable, low-carbon energy system.

2. Scientific publications

“Transfer learning in building dynamics prediction” and “A scalable approach for real-world implementation of deep reinforcement learning controllers in buildings based on online transfer learning: The HiLo case study”

These two papers look at how transfer learning (TL) can be used to boost the performance of AI-based HVAC control systems. The first study compares six TL strategies for deep neural network models that predict building dynamics—showing that selectively fine-tuning the decoder layers improves predictive accuracy. The second paper demonstrates a real-world online transfer learning approach for deep reinforcement learning (DRL) controllers. Pre-trained in simulation via digital twins and then transferred between offices, the method combines imitation learning and fine-tuning to outperform traditional rule-based controllers and DRL models trained from scratch.

What I’m thinking

I’ve followed advances in transfer learning applied to buildings ever since I started working in this field because they address one of the key issues in data-driven solutions: the lack of data. With more than 30,000 buildings already on the Ento platform, we’re excited about the potential to build a “hive mind” where data from different buildings can be leveraged to continually optimize operations and reduce energy waste globally.

“Agent Laboratory: Using LLM Agents as Research Assistants” presents a fully automated, multi-agent research pipeline where specialized LLM agents collaborate on different tasks, from literature review and experimental design to coding and report generation, thereby cutting research costs dramatically. In a complementary fashion, “Hallucination Mitigation Using Agentic AI Natural Language-Based Frameworks” tackles one of the most persistent challenges in generative AI by orchestrating multiple agents that iteratively review, fact-check, and refine content, with novel metrics to quantitatively track the reduction in hallucinations.

What I’m thinking

Everyone’s talking about AI agents as a key trend for 2025. Amid the inevitable noise, these two papers show two tangible applications: using agents to accelerate research and reduce hallucinations. Despite the impressive progress, I believe that having a human expert with critical thinking in the loop is still key to ensuring the outputs are reliable and truly meaningful. More on that in the next section.

3. Reimagine Energy publications

I was away most of January, so I didn’t get a chance to write a Reimagine Energy post. But I did have time to read, so here’s some of the content I loved and I think is worth sharing:

Nat Bullard’s annual presentation on the state of decarbonization is a must-read for everyone interested in the topic. Some of my favourite slides are 3, 77, 89, 95, and 171.

Humanity’s Last Exam: this benchmark, on which LLMs still perform poorly, feels more representative of a reality in which I still can’t offload the majority of my daily tasks to AI.

I’ve been thinking a lot lately about the impact of AI on writing and human critical thinking7, and I enjoyed reading these Substack posts on the topic:

4. AI in Energy job board

This space is dedicated to job posts in the sector that caught my attention during the last month. I have no affiliation with any of them, I’m just looking to help readers connect with relevant jobs in the market.

Data Engineer at Hypercube

PhD position in physics-informed data-driven techniques for supporting the energy transition of European cities at Cimne/UPC

Artificial Intelligence Engineer at MeteoSim

AI Energy Engineer at EKORE Digital Twin Solution

Conclusion

With so much going on in the sector, it’s not easy to follow everything. If you’re aware of anything that seems relevant and should be included in Currents (job posts, scientific articles, relevant industry events, etc.), please reply to this email or reach out on LinkedIn and I’ll be happy to consider them for inclusion!

1. https://arxiv.org/abs/2501.12948

2. https://huggingface.co/deepseek-ai/DeepSeek-R1-Zero

3. https://www.reuters.com/technology/chinas-deepseek-sets-off-ai-market-rout-2025-01-27/

4. https://oll.libertyfund.org/titles/jevons-the-coal-question

5. https://www.theguardian.com/us-news/2025/jan/20/trump-inauguration-tech-executives

6. https://www.energy.gov/articles/doe-releases-new-report-evaluating-increase-electricity-demand-data-centers

